How AI Creates Even More Legal Work For Lawyers

We all know that AI can draft documents faster, summarise cases in seconds, and automate routine tasks. But these capabilities only affect the outer layer of legal practice. They do not address what happens when technology changes reality itself and forces the law to respond.

The truth is that AI destabilises the assumptions the law relied on. And that destabilisation creates even more legal work for the lawyer.

How AI Breaks the Trust Shortcuts the Law Depended On

For most of legal history, systems worked because people relied on trust shortcuts. A familiar voice on the phone suggested identity. A video implied physical presence. A document suggested authorship and authenticity.

These cues were never perfect, but they were reliable enough to support commerce, employment decisions, governance, and enforcement.

AI breaks all of these shortcuts at once.

With voice cloning systems, deepfake videos, and generative text tools, deception no longer requires access, proximity, or specialised skill. It is cheap, fast, and scalable.

As a result, impersonation, fabricated evidence, and synthetic authority are no longer rare events, but structural risks. When decisions are made based on false signals and harm follows, the question of responsibility becomes unavoidable.

And that question is what will bring affected parties to lawyers who are fluent in AI-related issues.

Here are some of the areas where it arises:

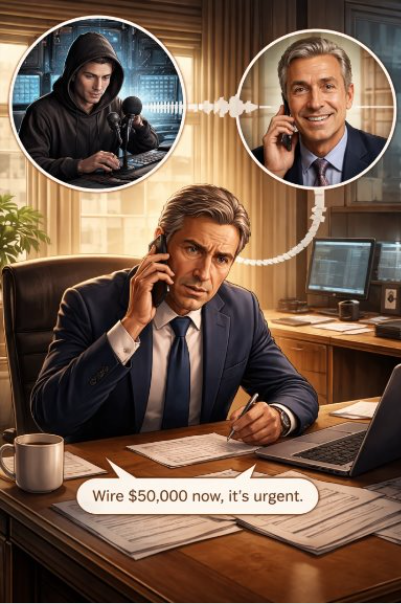

1. AI Fraud and Impersonation That Manufacture Authority

Here AI is used to replicate a real person’s voice, face, writing style, or authority in order to induce others to act on instructions that appear genuine but are entirely false.

Consider a finance executive who receives a voice message that sounds indistinguishable from the CEO, uses familiar phrasing, mirrors past urgency, and references an ongoing transaction. Funds are transferred. The instruction was fabricated. At that moment, the issue is no longer simple fraud.

Legal questions arise immediately:

- Was reliance reasonable in an environment where such deception is technically possible?

- Were internal controls designed for an AI-enabled threat model?

- Should the company absorb the loss, or should the bank have intervened?

- Does insurance respond when the fraud method did not exist when the policy was drafted?

Directors and senior management face scrutiny over oversight, governance, and anticipatory risk management.

These are not technical questions, but legal judgements about reliance, reasonableness, controls, and liability. Lawyers are involved early because every response affects downstream exposure.

2. Evidence Where Authenticity Cannot Be Assumed

The same destabilisation now affects evidence.

Courts, regulators, and employers operate in an environment where visual and audio material can no longer be trusted on appearance alone. Videos can be fabricated. Audio can be cloned. Documents can be generated instantly and tailored to context.

The central legal question has changed. It is no longer whether evidence is persuasive. It is whether it is real at all.

Lawyers must challenge authenticity, explain technical uncertainty without overwhelming decision-makers, and slow institutions that are under pressure to act quickly.

Acting too fast can cause irreversible harm. Acting too slowly can allow damage to spread. This work depends on judgement under uncertainty.

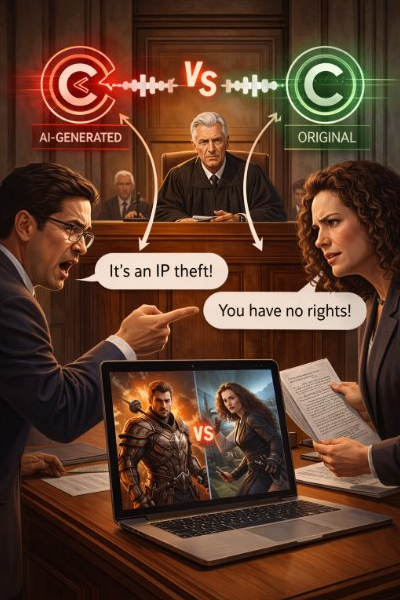

3. Intellectual Property in an Age of Accidental Exposure and Blurred Causation

AI has also transformed intellectual property risk.

Employees paste confidential information into AI prompts without malicious intent. AI outputs resemble protected works without deliberate copying. Training data may include copyrighted material without the owner’s consent.

The result:

- Businesses struggle to assess exposure.

- Rights holders want remedies.

- AI platforms seek to limit liability.

- Employers want policies that reduce risk without freezing productivity.

- Investors and acquirers want to know whether past AI use creates future liability that affects valuation.

These problems cannot be solved by technical controls alone. They require legal analysis of responsibility, causation, and risk allocation in situations where intent is unclear and boundaries are blurred.

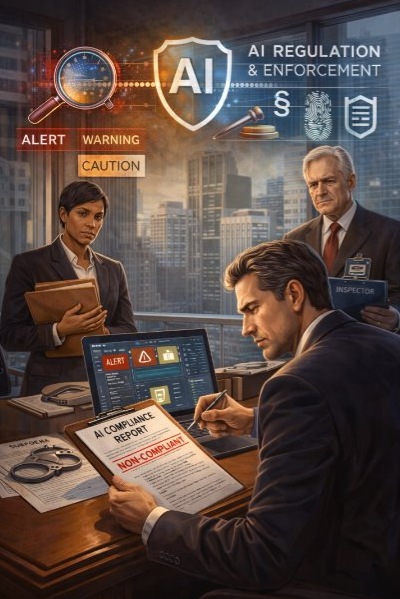

4. Regulation and the Expansion of Preventive Legal Work

Regulators increasingly govern how AI is deployed and used.

In practice:

- Businesses often do not know whether their systems fall within regulatory scope.

- Regulators issue notices, information requests, and audit demands.

- Disclosure obligations are ambiguous.

- Penalties can be significant.

Together, these pressures create sustained advisory work.

Lawyers interpret unclear obligations, structure governance frameworks, manage regulatory responses, and help organisations adjust behaviour before enforcement escalates.

This work begins long before litigation and continues well after initial compliance decisions are made.

5. AI-Caused Harm and the Reallocation of Responsibility

AI-driven systems already cause real physical, financial, and operational harm, even when no human actively intends the outcome.

Autonomous vehicles (self-driving cars) strike pedestrians. Automated trading systems trigger sudden losses. AI-driven decision tools deny services, terminate employees, or escalate enforcement actions without clear human oversight.

A machine cannot be punished, sued, or held morally responsible. Responsibility must attach to a human or an organisation, even when the harmful act was generated by a system operating without direct human input.

For example, where an autonomous vehicle is involved in an accident:

- The manufacturer argues the system was properly designed.

- The owner claims it was maintained correctly.

- The deploying party points to the software vendor.

- The integrator says the configuration followed specifications.

Each party claims distance from the final act.

The legal question is not who pressed a button, but who had control, who created the risk, and who should reasonably have foreseen the harm.

The same pattern appears in non-physical harm.

For example, where an AI fraud detection system freezes accounts automatically and customers suffer losses:

- The business claims it followed industry standards.

- The vendor of the AI system says the model worked as designed.

- Regulators ask why safeguards failed.

Lawyers analyse the delegation of decision-making, duty of care, and whether reliance on automated outputs was reasonable.

Thus AI fragments responsibility. And the law exists to reassemble it.

The Reality Facing the Legal Profession

AI will reduce low-value legal work. That outcome is inevitable, and that work was never defensible as a long-term foundation for the profession.

At the same time, AI dramatically increases high-value legal work involving uncertainty, judgement, and consequences that cannot be reversed.

Lawyers who cling to narrow, task-based roles will feel threatened. Lawyers who understand how AI changes risk, responsibility, and decision-making will be pulled deeper into advisory, governance, and dispute-shaping roles.

AI is not the end of law. In fact, it expands it.

Lawyers who are AI-fluent and understand how AI affects industries now have more legal work to do, and greater earning potential as a result.

Sen Ze is a lawyer, entrepreneur, and best-selling author known for his work at the intersection of law, technology, and entrepreneurship. With decades of experience spanning legal practice, business building, and technology adoption, he focuses on how emerging technologies change risk, responsibility, and professional decision-making.

He is the author of "The Intelligent Lawyer", a foundational text for practising lawyers who want to earn more without putting in extra time and effort or burning out. He is also the creator and presenter of the "AI & The Law" lecture series at BAC College, which examines how artificial intelligence generates new legal work for lawyers. His work is grounded in real-world legal consequences rather than theory, with a strong emphasis on judgement, accountability, and governance.

As an entrepreneur and author, Sen Ze has helped thousands of professionals understand how technological advancements change careers, businesses, and value creation. He is known for explaining complex ideas in clear, practical language and for preparing lawyers to move beyond routine work into higher-value advisory and strategic roles created by AI-driven uncertainty.

"The Intelligent Lawyer" is available now at https://bacstore.my/products/the-intelligent-lawyer.